Welcome back to my Power Platform AI series. In today’s post, I’m exploring a variety of tools within the Power Platform and Azure ecosystem to leverage secure calls to Azure AI models. I’ll be touching on Azure AI Studio, Power Automate, and Power Apps. It’s going to be fun 🙂

Pre-Requisites

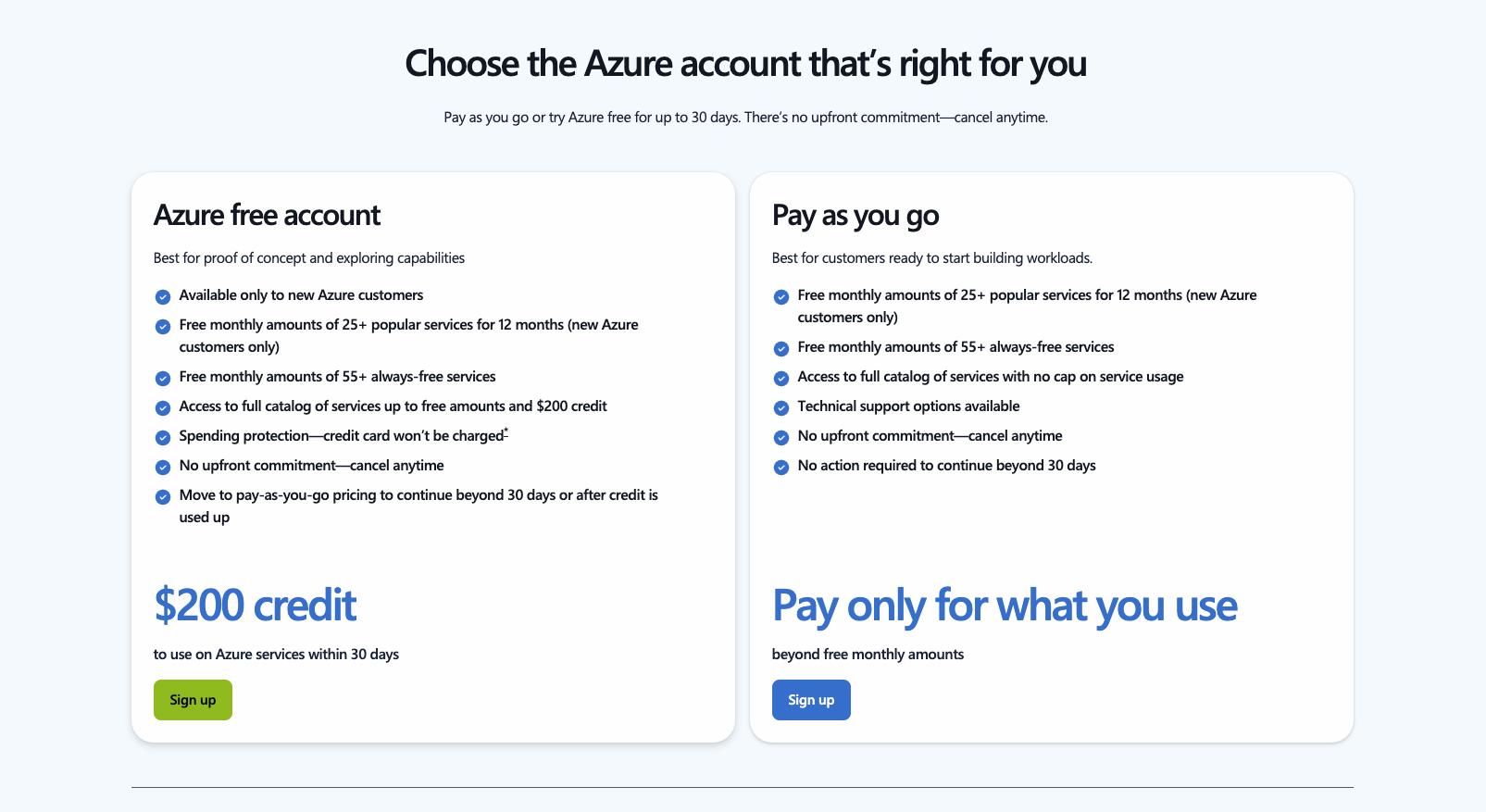

Before I jump into this experience, I do need to state that this requires a configured Azure subscription. Although the Azure AI usage may be relatively low, the slightly pricey bit rears its head with Azure API Management. This is something I am hoping to touch on in my next post. If you don’t have an active Azure subscription, you can register for Azure and receive a $200 credit (with limited AI capabilities), but only if you don’t already have an existing tenant. Sadly, this does not work with the Developer Program.

Azure AI Studio

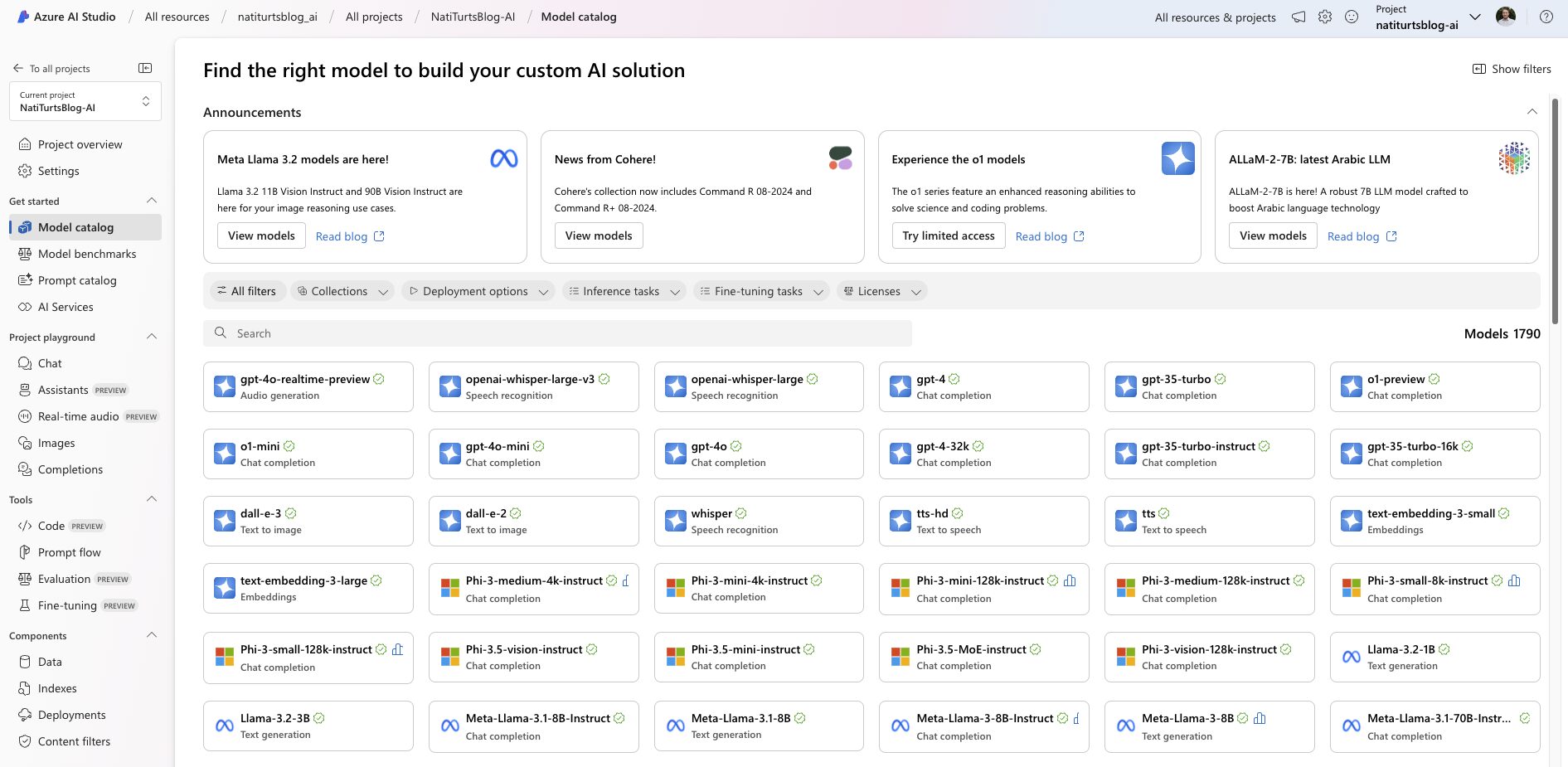

Azure AI Studio is the front door to Azure AI Services. Similar to make.powerapps.com, Azure AI Studio allows developers to manage active AI projects as well as deploy, test, maintain, and feed various AI models. From a model side, Azure AI Studio does not just offer Microsoft models; you will find a variety of models you can deploy, ranging from Microsoft and Meta to Google. Pricing varies per model, but the consumption plans work the same way, i.e., you are charged on a per-token usage basis.

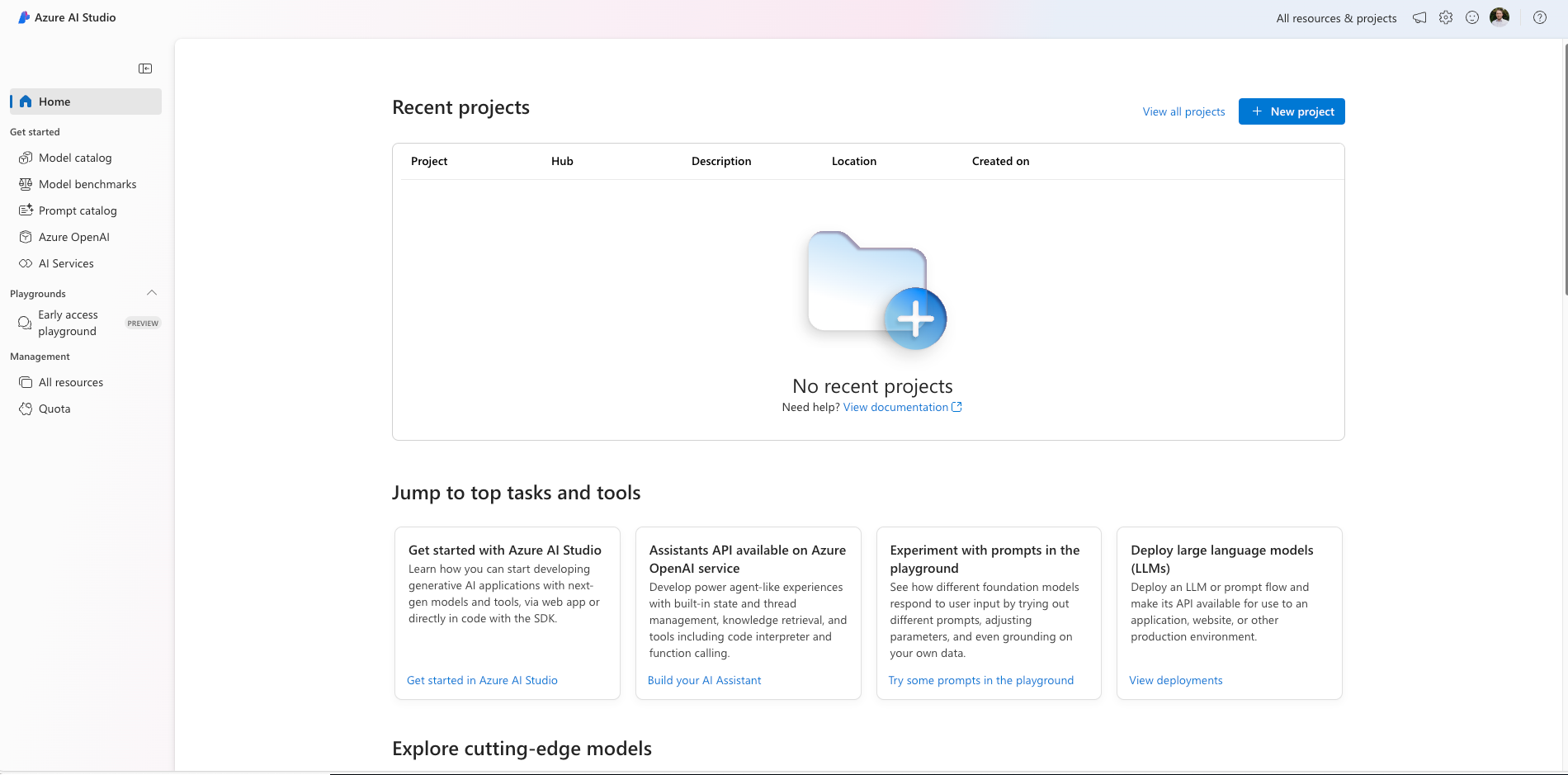

To get started, let’s head to ai.azure.com. You’ll land on the Azure AI Studio home page, where you get an overview of your Azure AI projects. If you’ve never set up an Azure AI project, you may need to create an AI Hub first.

A hub provides the hosting environment for your projects and any others that share it. It provides security, governance controls, and shared configurations that all projects can use.

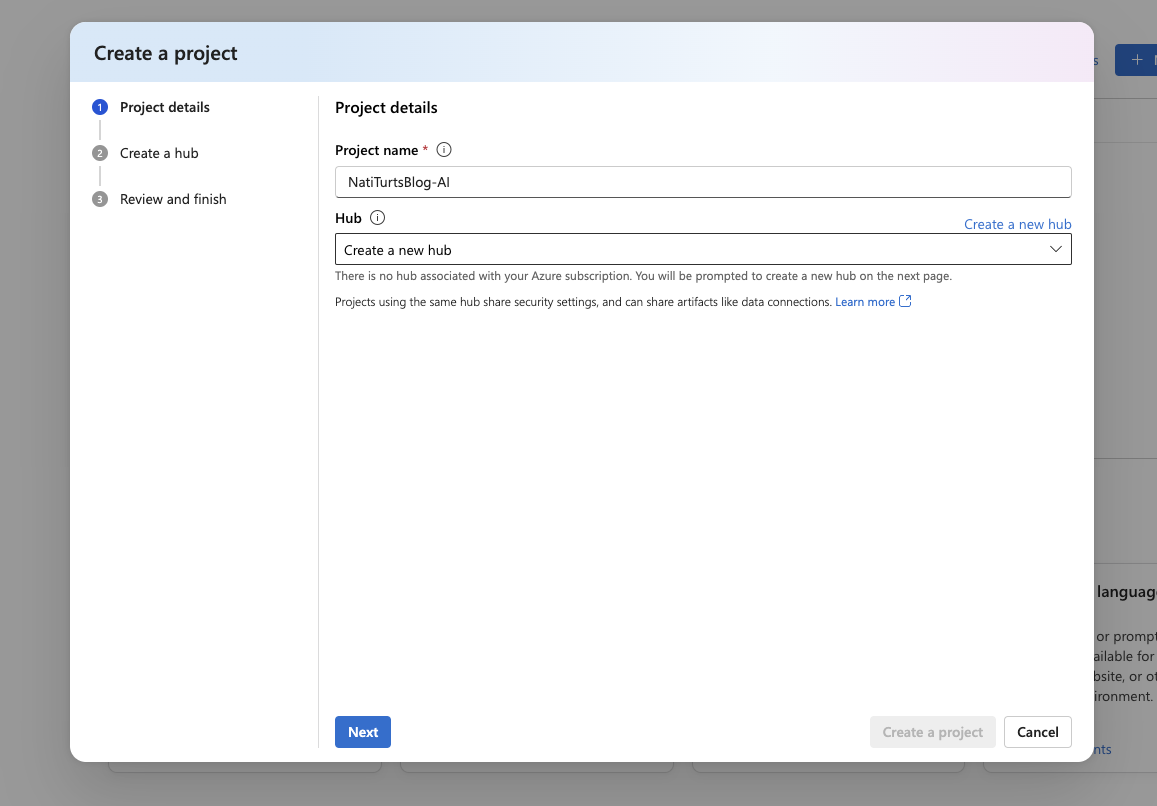

Creating a Project

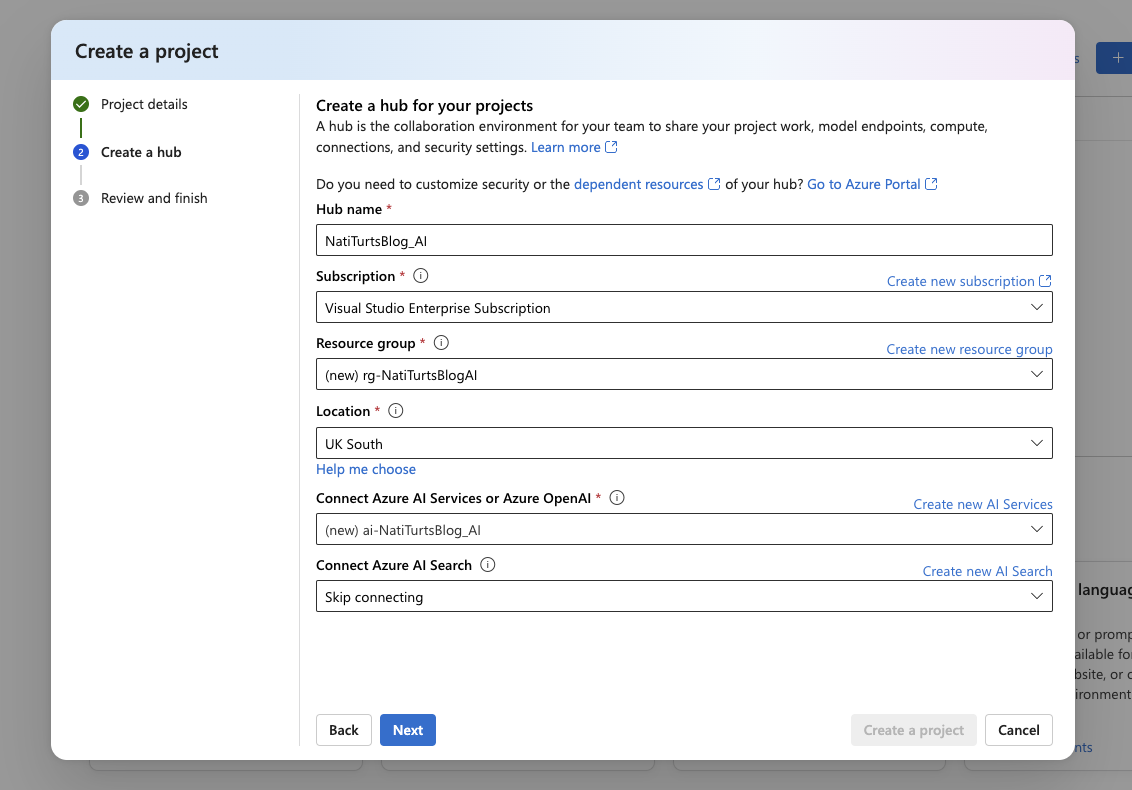

To start making use of Azure AI models, we need to create an Azure AI Studio project at ai.azure.com to house the deployments. When you create a new project, you will be prompted to give it a name and either select an existing AI Hub or create a new one. Hubs allow the Azure AI Search service to query your data through a vector search when working with Azure OpenAI.

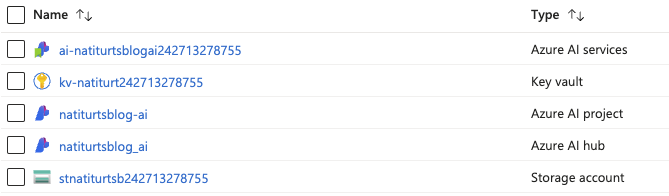

If you need to create a new hub, you will need to associate it with an active Azure subscription and resource group. Once you have a Hub set up, you will be able to proceed with creating a project. Doing so will create a variety of Azure AI-related services as well.

Once your project has been created, you will be able to find a variety of additional Azure services related to the project. This includes a designated key vault, an Azure AI service, and a blob storage account.

Deploy a Model

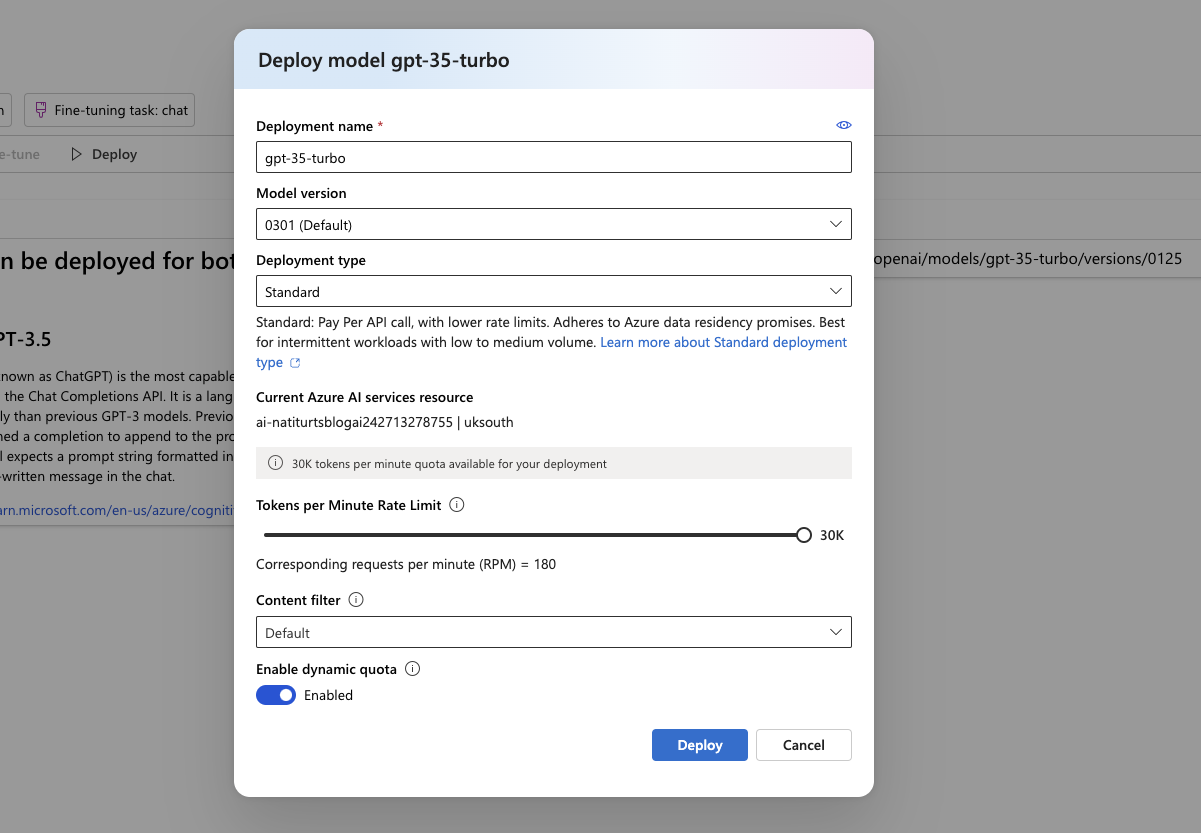

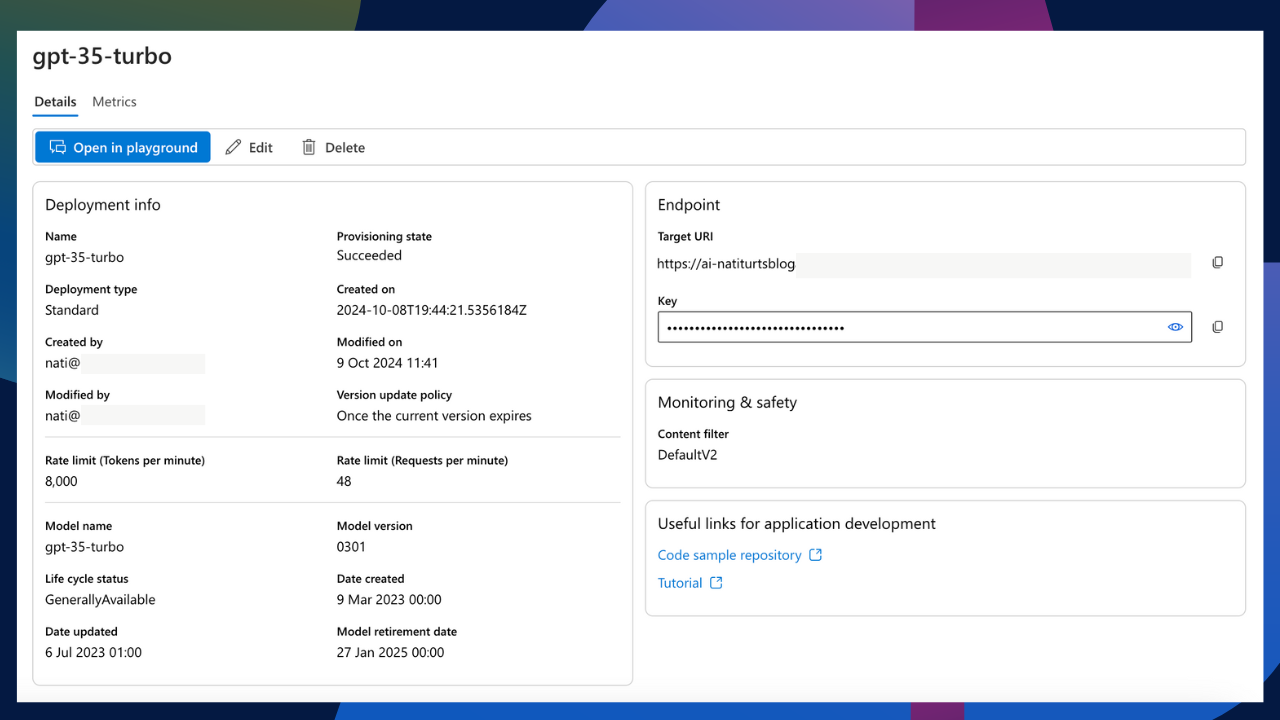

Now that we have an Azure AI project set up, we can deploy a model from the model catalogue. For this post’s purpose, I am going to deploy the Azure OpenAI gpt-35-turbo model. I’ll select the model and then select deploy. I am selecting this model because of its higher rate limit of 30k tokens per minute (TPM), whereas gpt-4o-mini is limited to 8k TPM.
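To put those TPM limits into perspective, here is a rough back-of-the-envelope sketch. It assumes every request costs the 221 prompt tokens measured in the Costings section plus an output budget of 100 tokens, which is a number I have made up for illustration. Azure's real rate limiting may count tokens differently, so treat the result as an upper bound rather than a guarantee.

```python
def max_requests_per_minute(tpm_limit: int, prompt_tokens: int, output_tokens: int) -> int:
    """Rough upper bound on requests per minute under a tokens-per-minute quota."""
    tokens_per_request = prompt_tokens + output_tokens
    if tokens_per_request <= 0:
        raise ValueError("a request must use at least one token")
    return tpm_limit // tokens_per_request


PROMPT_TOKENS = 221            # prompt size reported in the Costings section
ASSUMED_OUTPUT_TOKENS = 100    # hypothetical output budget, not a measured value

gpt35_rpm = max_requests_per_minute(30_000, PROMPT_TOKENS, ASSUMED_OUTPUT_TOKENS)
gpt4o_mini_rpm = max_requests_per_minute(8_000, PROMPT_TOKENS, ASSUMED_OUTPUT_TOKENS)

print(f"gpt-35-turbo at 30k TPM: about {gpt35_rpm} requests per minute")
print(f"gpt-4o-mini at 8k TPM:   about {gpt4o_mini_rpm} requests per minute")
```

Under these assumptions the 30k limit leaves room for roughly four times as many calls per minute, which matters once a flow starts firing for every incoming record.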

If you need to customise the model a bit more, you can do so by selecting the customize button. This will allow you to change factors like the deployment type and what version you want to deploy, as well as assign a specific content filter if you have already set one up. Finally, we can deploy the model.

Model Playground

Great, we have a deployed model. You should have been redirected to your model details. On this page, you should be able to view your model’s endpoint URI as well as the authorisation key. I will be using these directly for this post; however, in my next post, I will be pointing them to an Azure API Management service that will allow me to control various keys based on different needs. This also provides further security should there ever be an issue with an authorisation key.
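If you are curious how that endpoint URI is put together, the sketch below composes one in the usual Azure OpenAI chat completions shape. The resource name and deployment name are hypothetical, and the api-version value is an assumption on my part; the safest option is always to copy the URI straight from your own deployment page, as the host can differ depending on how the hub was created.

```python
from urllib.parse import urlencode


def build_chat_completions_url(resource_name: str, deployment_name: str, api_version: str) -> str:
    """Compose an Azure OpenAI chat completions URL from its parts."""
    base = f"https://{resource_name}.openai.azure.com"
    path = f"/openai/deployments/{deployment_name}/chat/completions"
    query = urlencode({"api-version": api_version})
    return f"{base}{path}?{query}"


# "my-resource" and "2024-02-01" are placeholders for illustration only.
url = build_chat_completions_url("my-resource", "gpt-35-turbo", "2024-02-01")
print(url)
```

The authorisation key does not go in the URL. It is sent separately in an `api-key` request header.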

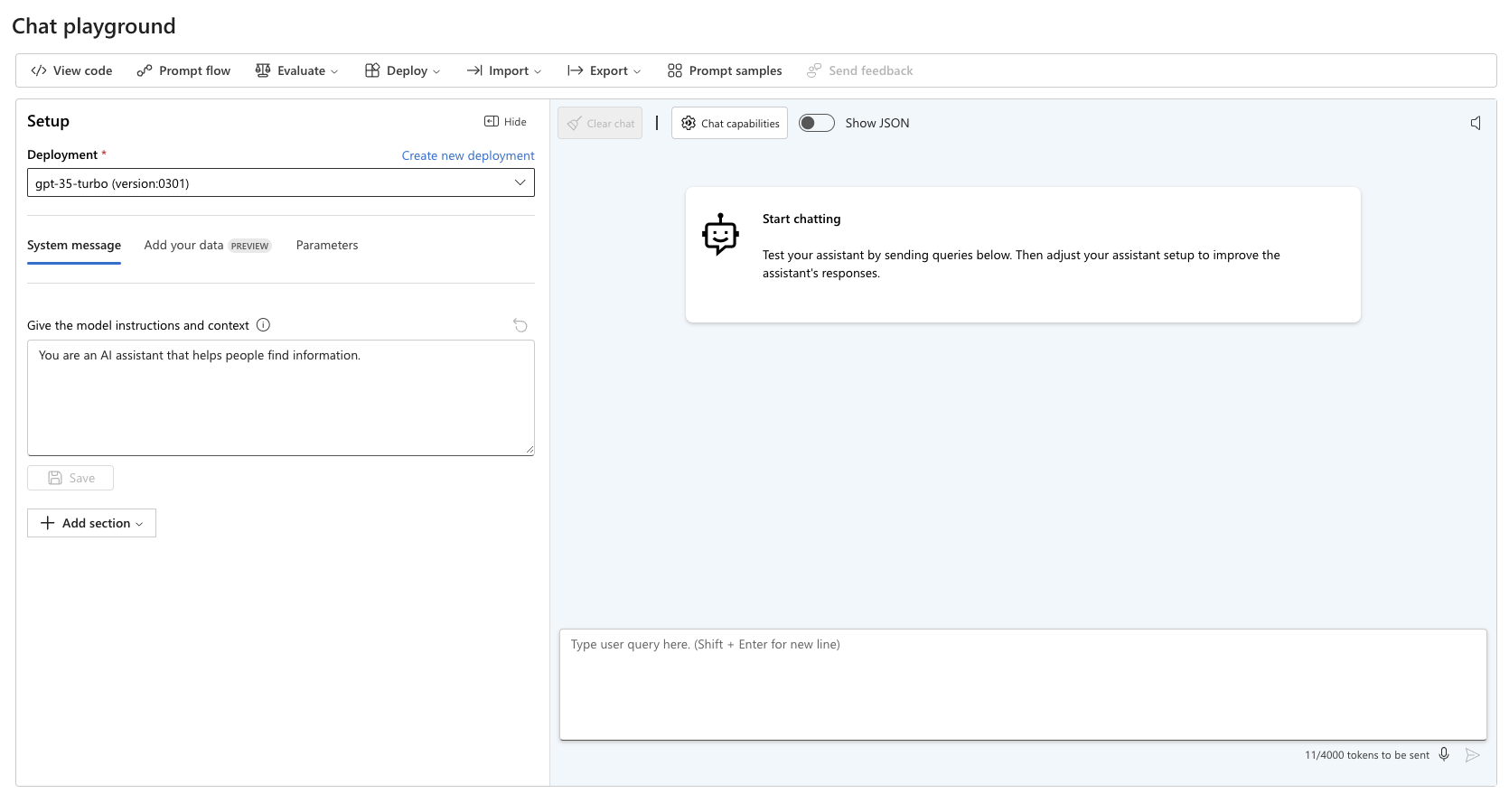

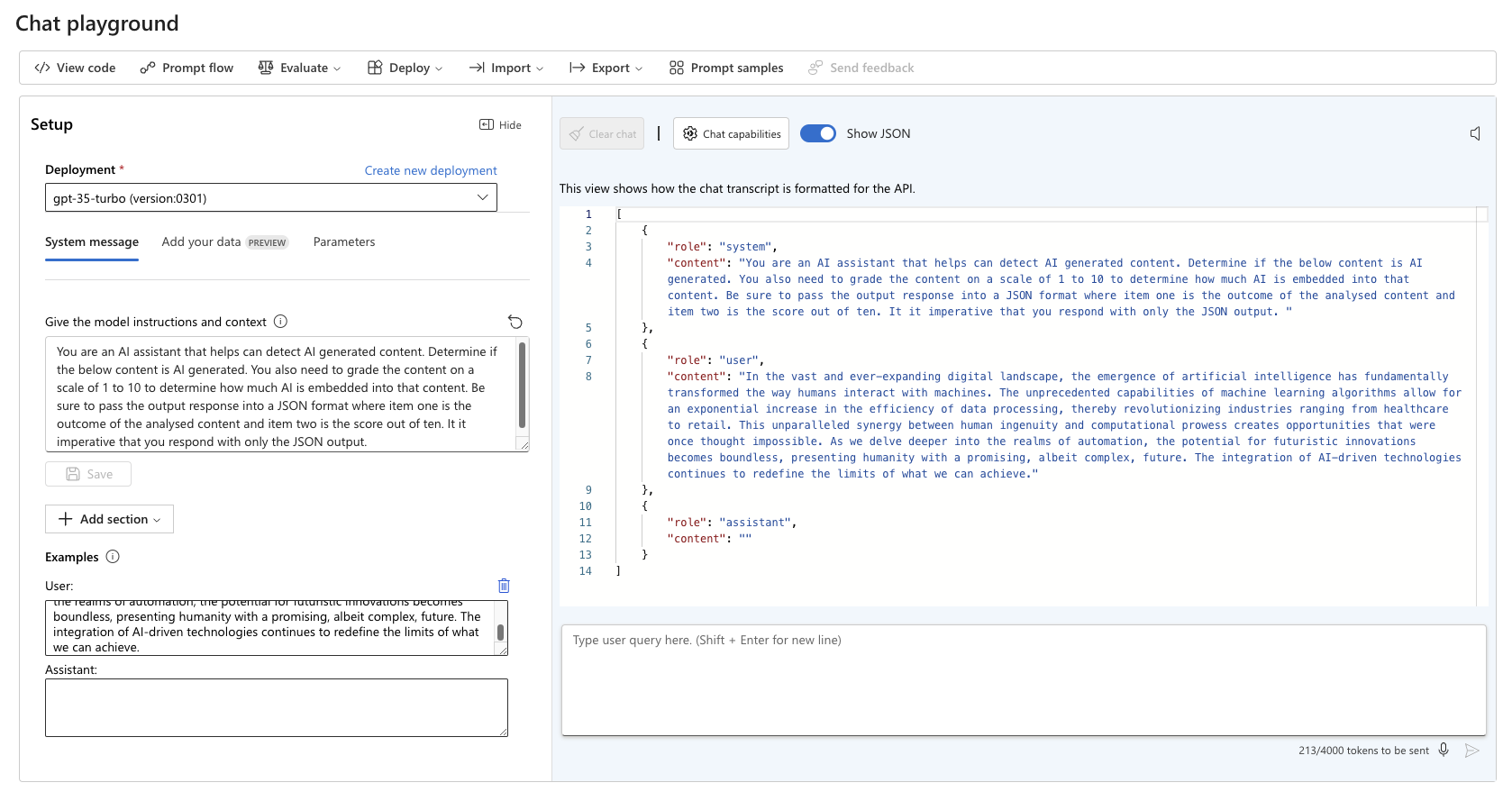

If I navigate to the chat playground, I should be able to interact with the model through chat completion. The chat playground allows you to experiment with various configurations that help you prompt the model but also assist the model in providing appropriate responses. Configuring sections and context in the playground does not affect the model in any way; it rather acts as a friendly user experience to configure these contexts and then export this into a pro-code format, such as JSON or Python.

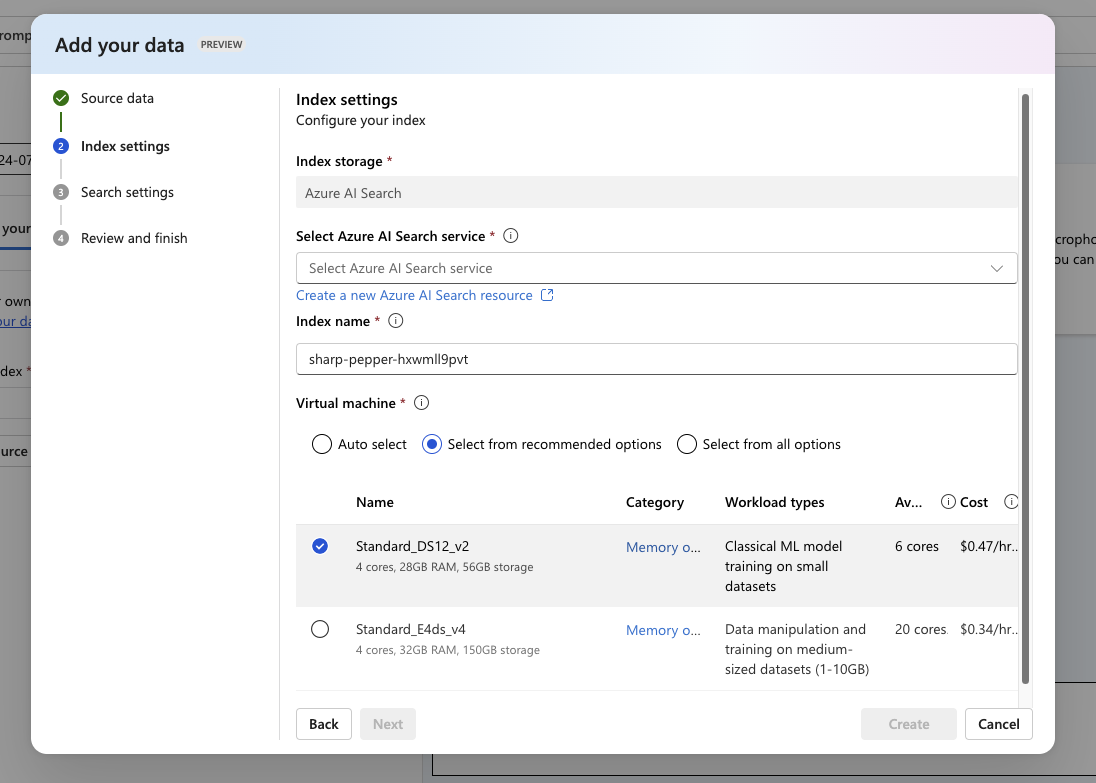

You can, however, add data to the model. Sources such as Azure AI Search, Blob Storage, or uploaded files can be made available to the project deployment and are then stored within your Azure subscription. Note that by adding a data source, you may need to configure an Azure AI Search service to search through this content. This requires computing/VMs and can become costly.

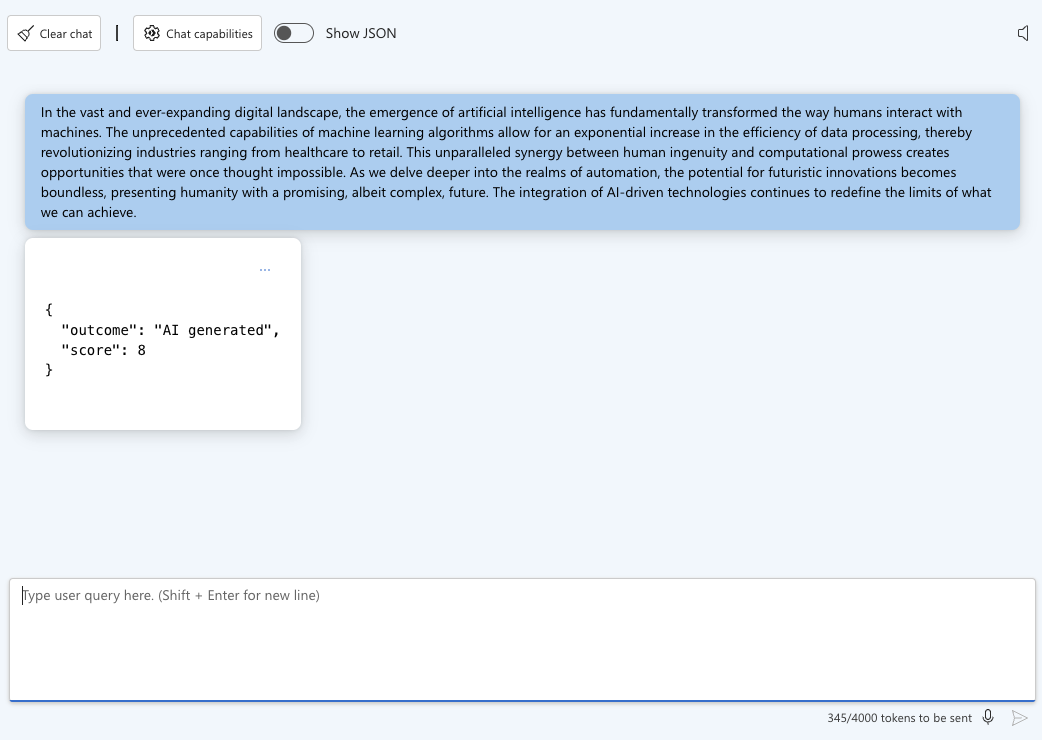

Let’s try to experiment now with the playground. I’ve drafted a prompt that I plan to use in a Power Automate flow later on. The prompt asks the model to analyse data that may be AI-generated and then grade it on an AI content scale of 1-10.

You are an AI assistant that helps detect AI generated content. Determine if the below content is AI generated. You also need to grade the content on a scale of 1 to 10 to determine how much AI is embedded into that content. Be sure to pass the output response into a JSON format where item one is the outcome of the analysed content and item two is the score out of ten. It is imperative that you respond with only the JSON output.

Additionally, I have added a section to the setup to provide an example of some AI generated content:

In the vast and ever-expanding digital landscape, the emergence of artificial intelligence has fundamentally transformed the way humans interact with machines. The unprecedented capabilities of machine learning algorithms allow for an exponential increase in the efficiency of data processing, thereby revolutionizing industries ranging from healthcare to retail. This unparalleled synergy between human ingenuity and computational prowess creates opportunities that were once thought impossible. As we delve deeper into the realms of automation, the potential for futuristic innovations becomes boundless, presenting humanity with a promising, albeit complex, future. The integration of AI-driven technologies continues to redefine the limits of what we can achieve.

If I save the setup and opt to view the JSON within the chat, you can see that the playground has provided me with the structured JSON body I can use in an HTTP request.

Power Automate with Azure AI

So we have our Azure AI model deployed. Let’s plug it into Power Automate. Although there is a preview Microsoft connector called Azure OpenAI (preview), for the life of me, I cannot get this thing working, so we’re going the HTTP route today. If you have worked with this connector before, please drop me a DM; I need some assistance.

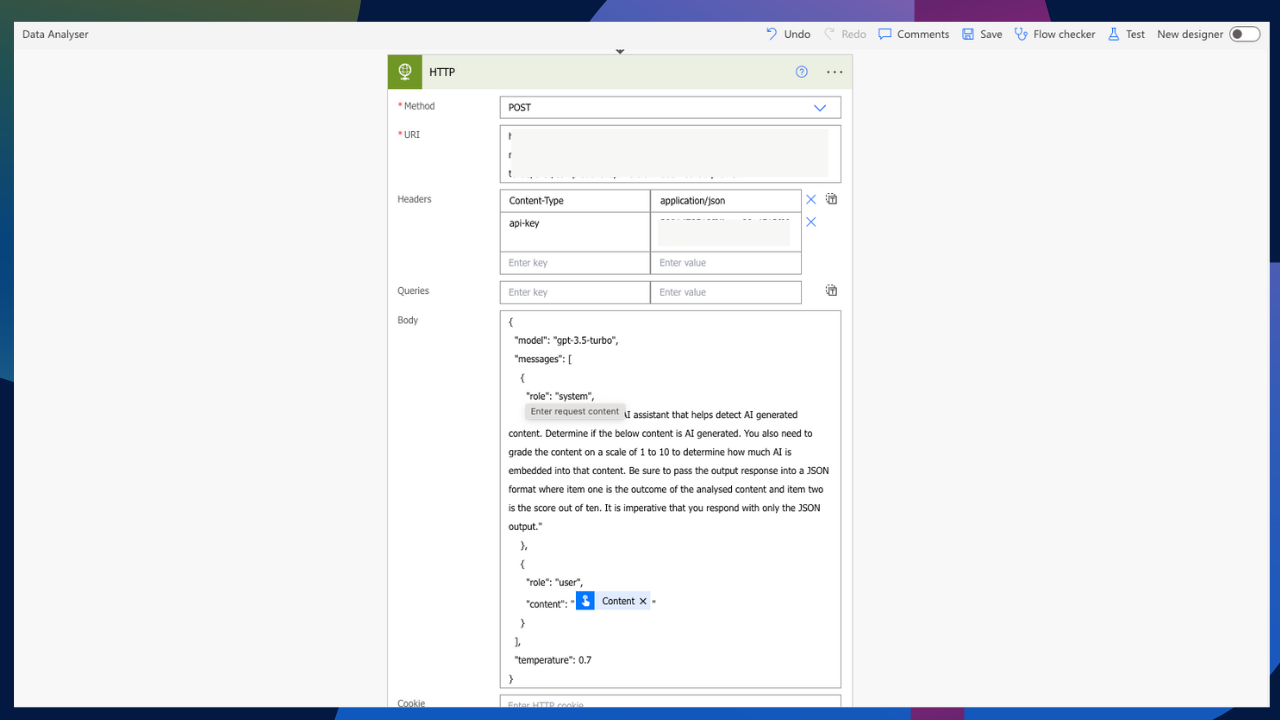

Within Power Automate, I am going to add a new HTTP action. The setup is very similar to my previous post on OpenAI. For the HTTP method, I am using POST. For the URI, I am using my model’s endpoint, which can be found under your model deployment within Azure AI Studio.

I then need to add Content-Type and the API key to the request header. Finally, I can compose my body for the request. This will consist of the messages, roles, and content sent to the AI model.

    {
      "model": "gpt-3.5-turbo",
      "messages": [
        {
          "role": "system",
          "content": "You are an AI assistant that helps detect AI generated content. Determine if the below content is AI generated. You also need to grade the content on a scale of 1 to 10 to determine how much AI is embedded into that content. Be sure to pass the output response into a JSON format where item one is the outcome of the analysed content and item two is the score out of ten. It is imperative that you respond with only the JSON output."
        },
        {
          "role": "user",
          "content": "@{triggerBody()['text']}"
        }
      ],
      "temperature": 0.7
    }

After everything has been placed together, my HTTP request looks something like this:

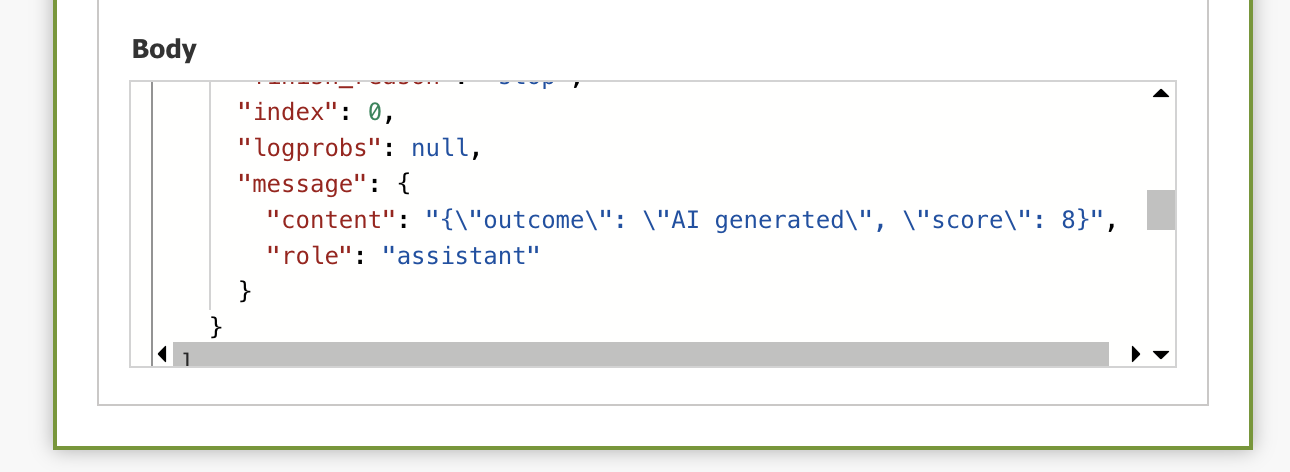
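For anyone who wants to test the endpoint outside of Power Automate first, here is a minimal Python sketch of the same HTTP request. The function name, URL, key, and shortened system prompt are all placeholders of mine, and I have left out the "model" field on the assumption that the deployment named in the URL decides which model answers. The sketch only builds the request; nothing is sent unless you uncomment the last lines.

```python
import json
import urllib.request


def build_detection_request(url, api_key, system_prompt, text, temperature=0.7):
    """Build (but do not send) a POST request shaped like the flow's HTTP action."""
    body = {
        "messages": [
            {"role": "system", "content": system_prompt},
            # In the flow, this value comes from triggerBody()['text'].
            {"role": "user", "content": text},
        ],
        "temperature": temperature,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Placeholder values only: swap in the URI and key from your own deployment page.
request = build_detection_request(
    url="https://example.invalid/openai/deployments/my-deployment/chat/completions",
    api_key="<your-api-key>",
    system_prompt="You are an AI assistant that helps detect AI generated content.",
    text="Text to analyse goes here.",
)
print(request.get_method(), request.full_url)

# To actually call the model, uncomment the next two lines:
# with urllib.request.urlopen(request) as response:
#     print(response.read().decode("utf-8"))
```

Keeping the build step separate from the send step makes it easy to inspect the headers and body before spending any tokens.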

If I prompt the below, the flow queries the AI model and returns the response determining how much of the below is AI generated.

In the vast and ever-expanding digital landscape, the emergence of artificial intelligence has fundamentally transformed the way humans interact with machines. The unprecedented capabilities of machine learning algorithms allow for an exponential increase in the efficiency of data processing, thereby revolutionizing industries ranging from healthcare to retail. This unparalleled synergy between human ingenuity and computational prowess creates opportunities that were once thought impossible. As we delve deeper into the realms of automation, the potential for futuristic innovations becomes boundless, presenting humanity with a promising, albeit complex, future. The integration of AI-driven technologies continues to redefine the limits of what we can achieve.
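Because the prompt asks for JSON only, the reply can be parsed downstream. The sketch below assumes the standard chat completions response shape, where the answer sits in `choices[0].message.content`, and it reads the first two values of the model's JSON in order, since the prompt says item one is the outcome and item two is the score. The sample response is hand-written for illustration and is not real model output; the key names in it are hypothetical.

```python
import json
from typing import Tuple


def extract_verdict(response_body: str) -> Tuple[str, int]:
    """Return (outcome, score) from a chat completions response body."""
    response = json.loads(response_body)
    content = response["choices"][0]["message"]["content"]
    answer = json.loads(content)  # fails loudly if the model added extra prose

    values = list(answer.values())
    if len(values) < 2:
        raise ValueError("expected two items: the outcome and the score")

    outcome, score = str(values[0]), int(values[1])
    if not 1 <= score <= 10:
        raise ValueError(f"score {score} is outside the 1 to 10 scale")
    return outcome, score


# Hand-written sample, not a real response from the deployed model.
sample_response = json.dumps({
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": '{"outcome": "Likely AI generated", "score": 9}',
            }
        }
    ]
})

print(extract_verdict(sample_response))
```

Models do not always follow the "JSON only" instruction, so it is worth wrapping a call like this in error handling before trusting the score.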

Costings

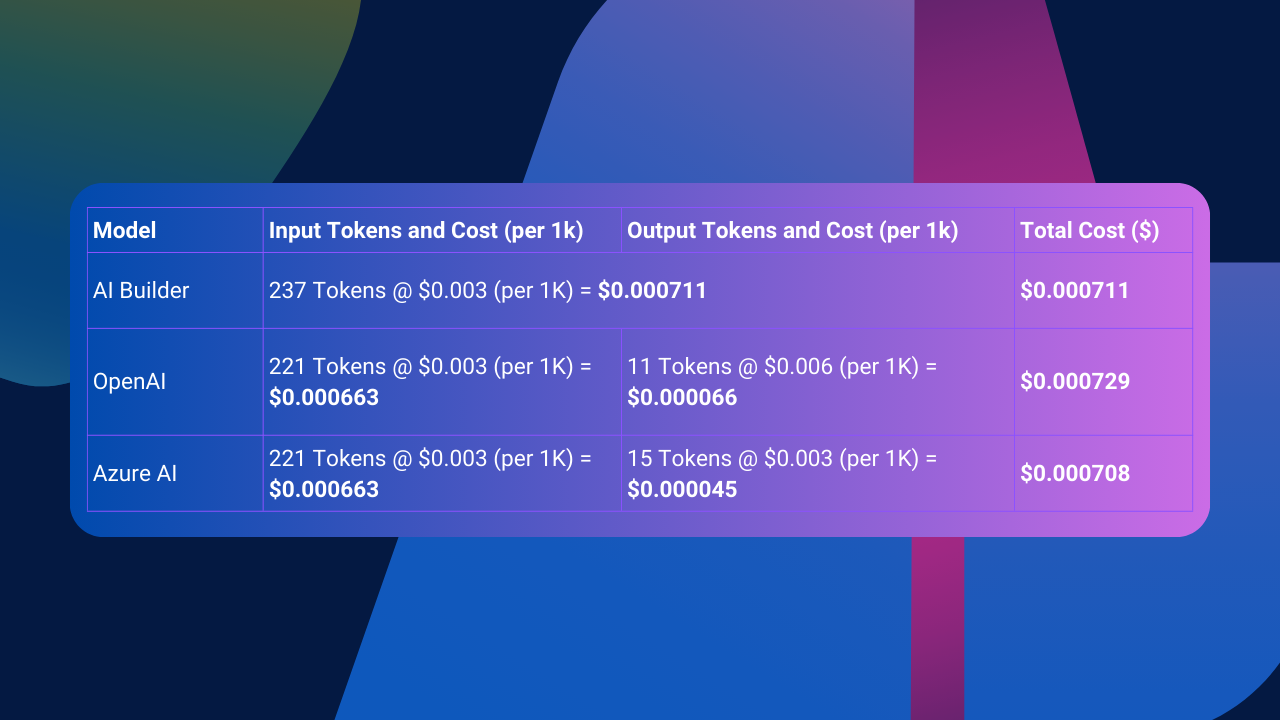

In my previous post, I weighed up the costings between using Power Platform AI Builder and an OpenAI endpoint. I stated that OpenAI only billed for input tokens. After doing some calculations for this post, I can say I was mistaken: OpenAI bills for output tokens as well.

I have created a new AI Builder prompt and adjusted my OpenAI flow so that both use the gpt-35-turbo model with the same prompt.

The prompt itself totals 221 input tokens. To clearly view the token usage per platform, I have placed them into the below tables.

Although the pricing margins are very minimal, it’s always good to have a holistic view of the potential costings you may incur when deciding to use one of these models.
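The arithmetic behind per-token billing is simple enough to sketch. The prices below are placeholders I have invented purely to show the calculation, as is the 50-token output size; only the 221 input tokens come from this post. Check the current pricing page for your model and region before relying on any figure.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Estimate the cost of one call when input and output tokens are both billed."""
    input_cost = (input_tokens / 1000) * input_price_per_1k
    output_cost = (output_tokens / 1000) * output_price_per_1k
    return input_cost + output_cost


INPUT_TOKENS = 221              # prompt size reported above
ASSUMED_OUTPUT_TOKENS = 50      # hypothetical reply size
PLACEHOLDER_INPUT_PRICE = 0.001   # made-up price per 1k input tokens
PLACEHOLDER_OUTPUT_PRICE = 0.002  # made-up price per 1k output tokens

cost_per_call = estimate_cost(
    INPUT_TOKENS, ASSUMED_OUTPUT_TOKENS,
    PLACEHOLDER_INPUT_PRICE, PLACEHOLDER_OUTPUT_PRICE,
)
print(f"Estimated cost per call: {cost_per_call:.6f}")
print(f"Estimated cost per 10,000 calls: {cost_per_call * 10_000:.2f}")
```

Multiplying the per-call figure by an expected monthly volume is usually the quickest way to see whether a small per-token difference actually matters.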

Conclusion

Although this was a relatively similar post to my OpenAI post, it was insightful learning my way around the various Azure services, more specifically, Azure OpenAI. I also learnt I made a mistake in my previous post regarding the OpenAI costs.

During this post, I faced a variety of issues:

Token Limits

Originally, I had deployed a gpt-4o-mini model in Azure AI Studio. The use case I had was to compare a custom prompt that extracted data fields from business cards against the AI Builder model that does the same. Just by uploading an image to the gpt-4o-mini model, I already reached my maximum token usage, whereas AI Builder already offers this model prebuilt and OpenAI had no issues with performing this task.

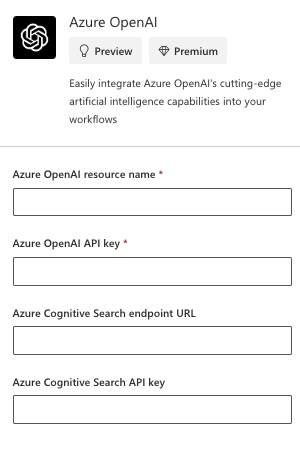

Azure OpenAI Connector

Microsoft has an Azure OpenAI (preview) connector that can be used in Power Automate and Power Apps. I tried various configurations over a few days to get this connection working and was constantly faced with the same error.

All in all, this was an exciting experience, and I am looking forward to wrapping this in a custom connector with a bit of Azure API Management in the next post.

Until then, I hope you enjoyed this post and found it insightful. If you did, feel free to buy me a coffee to help support me and my blog.